From ancient Greece to modern Washington, political memoirs have been an irresistible source of gossip about great leaders

The Wall Street Journal, November 30, 2018

ILLUSTRATION: THOMAS FUCHS

The tell-all memoir has been a feature of American politics ever since Raymond Moley, an ex-aide to Franklin Delano Roosevelt, published his excoriating book “After Seven Years” while FDR was still in office. What makes the Trump administration unusual is the speed at which such accounts are appearing—most recently, “Unhinged,” by Omarosa Manigault Newman, a former political aide to the president.

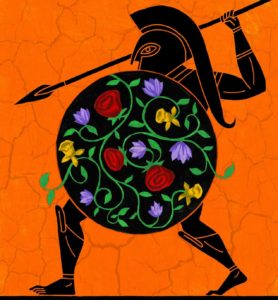

Spilling the beans on one’s boss may be disloyal, but it has a long pedigree. Alexander the Great is thought to have inspired the genre. His great run of military victories, beginning with the Battle of Chaeronea in 338 B.C., was so unprecedented that several of his generals felt the urge—unknown in Greek literature before then—to record their experiences for posterity.

Unfortunately, their accounts didn’t survive, save for the memoir of Ptolemy Soter, the founder of the Ptolemaic dynasty in Egypt, which exists in fragments. The great majority of Roman political memoirs have also disappeared—many by official suppression. Historians particularly regret the loss of the memoirs of Agrippina, the mother of Emperor Nero, who once boasted that she could bring down the entire imperial family with her revelations.

The Heian period (794-1185) in Japan produced four notable court memoirs, all by noblewomen. Dissatisfaction with their lot was a major factor behind these accounts, particularly for the anonymous author of “The Gossamer Years,” written around 974. The author was married to Fujiwara no Kane’ie, the regent for the Emperor Ichijo. Her exalted position at court masked a deeply unhappy private life; she was made miserable by her husband’s serial philandering, describing herself as “rich only in loneliness and sorrow.”

In Europe, the first modern political memoir was written by the Duc de Saint-Simon (1675-1755), a frustrated courtier at Versailles who took revenge on Louis XIV with his pen. Saint-Simon’s tales hilariously reveal the drama, gossip and intrigue that surrounded a king whose intellect, in his view, was “beneath mediocrity.”

But even Saint-Simon’s memoirs pale next to those of the Korean noblewoman Lady Hyegyeong (1735-1816), wife of Crown Prince Sado of the Joseon Dynasty. Her book, “Memoirs Written in Silence,” tells shocking tales of murder and madness at the heart of the Korean court. Sado, she writes, was a homicidal psychopath who went on a bloody killing spree that was stopped only by the intervention of his father, King Yeongjo. Unwilling to see his son publicly executed, Yeongjo had the prince locked inside a rice chest and left to die. Understandably, Hyegyeong’s memoirs caused a huge sensation in Korea when they were first published in 1939, following the death of the last Emperor in 1926.

Fortunately, the Washington political memoir has been free of this kind of violence. Still, it isn’t just Roman emperors who have tried to silence uncomfortable voices. According to the historian Michael Beschloss, President John F. Kennedy had the White House household staff sign agreements to refrain from writing any memoirs. But eventually, of course, even Kennedy’s secrets came out. Perhaps every political leader should be given a plaque that reads: “Just remember, your underlings will have the last word.”