Our favorite Valentine’s Day flower was already a symbol of passion in ancient Greek mythology

February 13, 2021

“My luve is like a red red rose,/That’s newly sprung in June,” wrote the Scottish poet Robert Burns in 1794, creating an inexhaustible revenue stream for florists everywhere, especially around Valentine’s Day. But why a red rose, you might well ask.

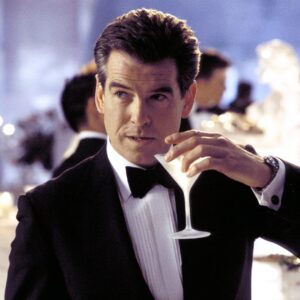

According to Greek myth, the blood of Aphrodite turned roses red.

PHOTO: GETTY IMAGES

Longevity is one reason. The rose is an ancient and well-traveled flower: A 55-million-year-old rose fossil found in Colorado suggests that roses were already blooming when our earliest primate ancestors began populating the earth. If you want to see where it all began, at least in the New World, then a trip to the Florissant Fossil Beds National Monument, roughly two hours’ drive from Denver, should be on your list of things to do once the pandemic is over.

In Greek mythology the rose was associated with Aphrodite, goddess of love, who was said to have emerged from the sea in a shower of foam that transformed into white roses. Her son Cupid bribed Harpocrates, the god of silence, with a single rose in return for keeping quiet about Aphrodite’s love affairs, giving rise to the Latin phrase sub rosa, “under the rose,” as a term for secrecy. As for the red rose, it was said to be born of tragedy: Aphrodite became tangled in a rose bush when she ran to comfort her lover Adonis as he lay dying from a wild boar attack. Scratched and torn by its thorns, her feet bled onto the roses and turned them crimson.

For the ancient Romans, the rose’s symbolic connection to love and death made it useful for celebrations and funerals alike. A Roman banquet without a suffocating cascade of petals was no banquet at all, and roses were regularly woven into garlands or crushed for their perfume. The first time Mark Antony saw Cleopatra he had to wade through a carpet of rose petals to reach her, by which point he had completely lost his head.

Rose cultivation in Asia became increasingly sophisticated during the Middle Ages, but in Europe the early church looked askance at the flower, regarding it as yet another example of pagan decadence. Fortunately, the Frankish emperor Charlemagne, an avid horticulturalist, refused to be cowed by old pieties, and in 794 he decreed that all royal gardens should contain roses and lilies.

The imperial seal of approval hastened the rose’s acceptance into the ecclesiastical fold. The Virgin Mary was likened to a thornless white rose because she was free of original sin. In fact, a climbing rose planted in her honor in 815 by the monks of Germany’s Hildesheim Cathedral is the oldest surviving rose bush today. Red roses, by contrast, symbolized the Crucifixion and Christian martyrs like St. Valentine, a priest killed by the Romans in the 3rd century, whose feast day is celebrated on Feb. 14. In the 14th century, his emergence as the patron saint of romantic love tipped the scales in favor of the red over the white rose.

The symbolism attached to the rose has long made it irresistible to poets. Shakespeare’s audience would have known that when Juliet compares Romeo to the flower—“that which we call a rose,/By any other name would smell as sweet”—it meant tragedy awaited the lovers. Yet they would have felt comforted, too, since each red rose bears witness, as Burns wrote, to the promise of love unbound and eternal: “Till a’ the seas gang dry, my dear,/And the rocks melt wi’ the sun.”